Research Activities

Dr Tarek Taha currently leads the Robotics Lab at Dubai Future Foundation. He has more than 15 years of experience in robotics, autonomous systems and artificial intelligence, working in both industry and academia.

Having worked extensively in the robotics, autonomous systems and artificial intelligence domains, I have developed a great interest in advancing the state of robotics and autonomous systems to enable their deployment in practical applications.

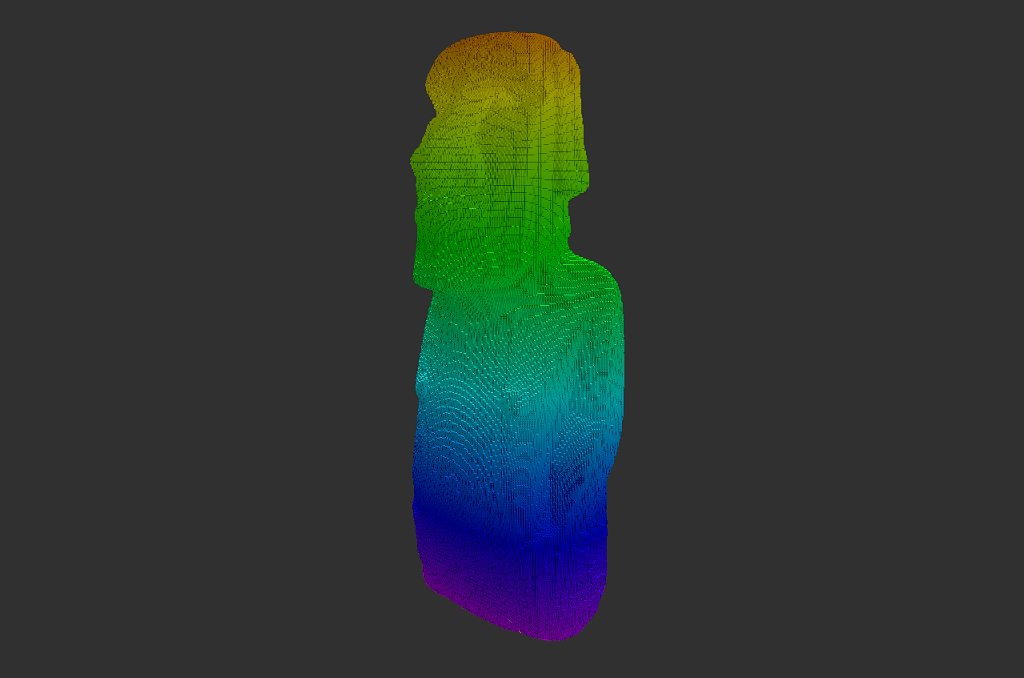

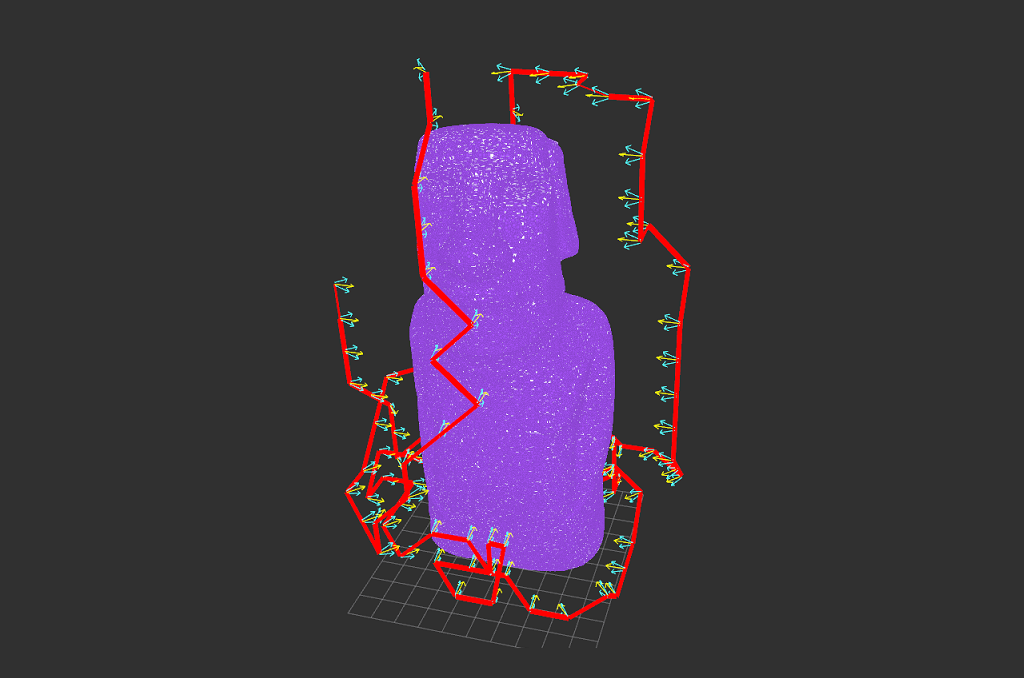

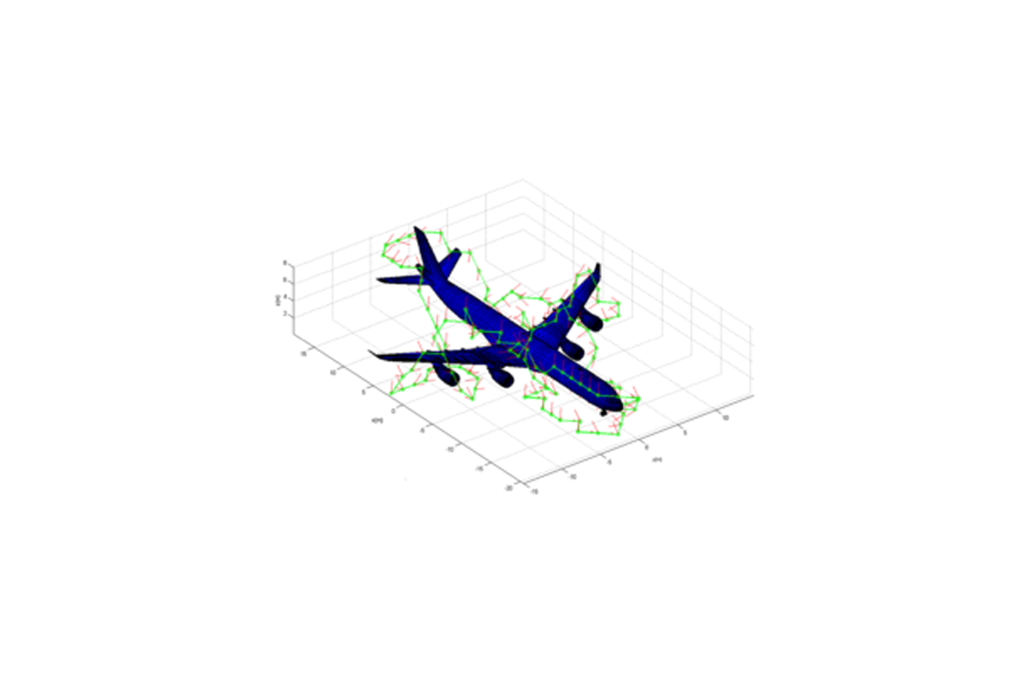

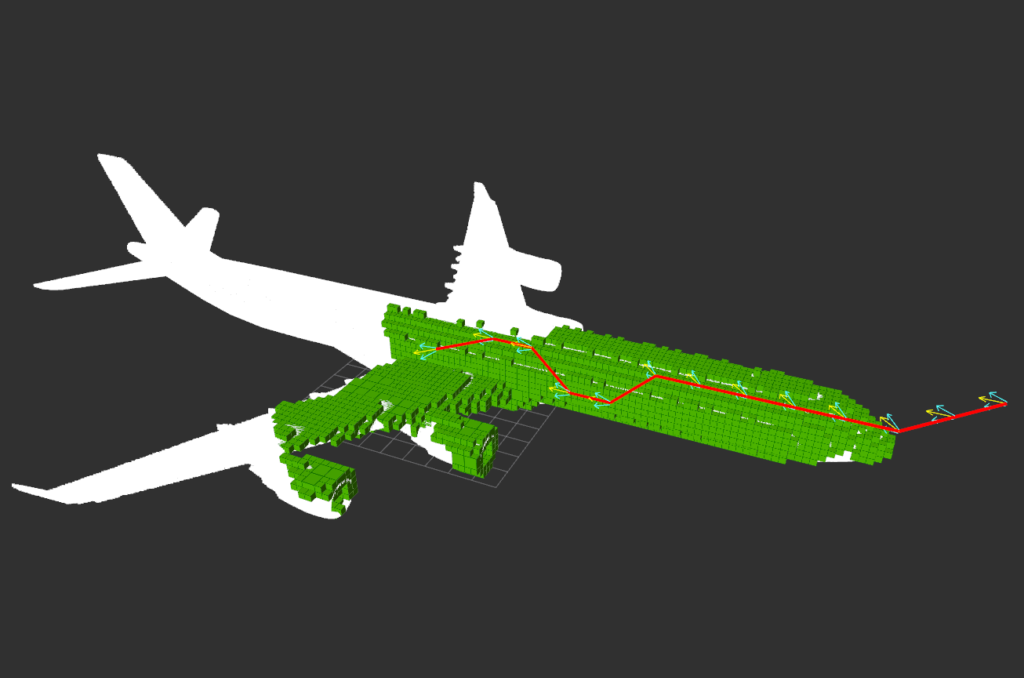

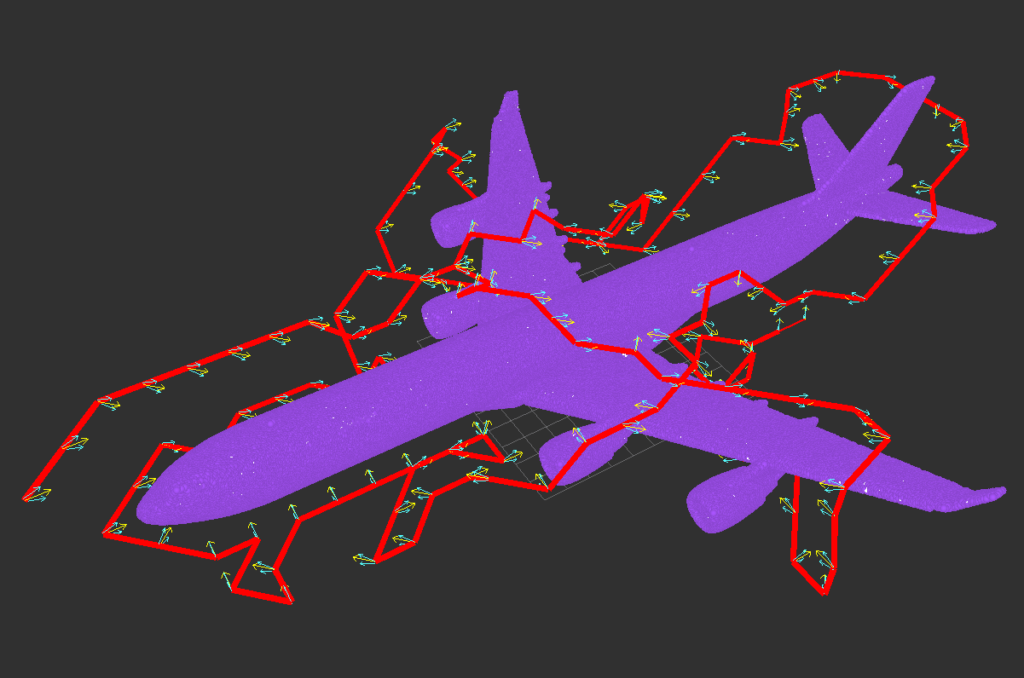

Coverage Path Planning

Collaborators

- Prof. Yahya Zweiri

- Prof. Lakmal Seneviratne

- Prof. Jorge Dias

Students

- Randa Almadhoun

- Abdullah Abduldayem

Relevant Publications

- A survey on multi-robot coverage path planning for model reconstruction and mapping

- Coverage Path Planning for Complex Structures Inspection Using Unmanned Aerial Vehicle (UAV)

- Guided Next Best View for 3D Reconstruction of Large Complex Structures

- Coverage Path Planning with Adaptive Viewpoint Sampling to Construct 3D Models of Complex Structures for the Purpose of Inspection

- Aircraft Inspection Using Unmanned Aerial Vehicles

- GPU accelerated coverage path planning optimized for accuracy in robotic inspection applications

Search and Rescue

Robotic Search and Rescue (RSAR) is a challenging yet promising technology area with the potential for high-impact practical deployment in real-world search and rescue disaster scenarios. Response time in a disaster environment is a key factor that requires a careful balance between rapid and safe intervention. SAR response should be rapid in order to maximize the number of survivors detected and to locate all sources of danger in a timely manner. Disaster response should also be organized and synchronized in order to save as many victims as possible, which is the primary objective of search and rescue operations. In general, responders have approximately 48 hours to find trapped survivors; after that, the likelihood of finding victims alive drops substantially. Moreover, conditions at SAR sites are usually hazardous, which makes the SAR team more vulnerable: it is forced to operate in an unstructured environment with limited access to medical supplies, power sources, and other essential tools and utilities.

Collaborators

- Prof. Lakmal Seneviratne

- Prof. Jorge Dias

- Dr. Nawaf Al Moosa

Students

- Hend Al Tair

- Reem Ashour

- Randa Almadhoun

The research work conducted mainly focuses on:

- Exploring indoor environments to identify and locate victims using multiple sensors (e.g., electro-optical, thermal, wireless, stereo imaging, etc.)

- Coordinating a team of robots and humans during search and rescue missions

- Semantic environment mapping that labels hazards and ranks them

Relevant Publications

- Victim Localization in USAR Scenario Exploiting Multi-Layer Mapping Structure

- Decision making for multi-objective multi-agent search and rescue missions

- Decentralized multi-agent POMDPs framework for humans-robots teamwork coordination in search and rescue

- Exploration for Object Mapping Guided by Environmental Semantics using UAVs

UAV based victim Localization

@article{goian2019victim,

title={Victim localization in USAR scenario exploiting multi-layer mapping structure},

author={Goian, Abdulrahman and Ashour, Reem and Ahmad, Ubaid and Taha, Tarek and Almoosa, Nawaf and Seneviratne, Lakmal},

journal={Remote Sensing},

volume={11},

number={22},

pages={2704},

year={2019},

publisher={Multidisciplinary Digital Publishing Institute}

}

Exploration using Adaptive Grid Sampling

@article{almadhoun2019guided,

title={Guided next best view for 3D reconstruction of large complex structures},

author={Almadhoun, Randa and Abduldayem, Abdullah and Taha, Tarek and Seneviratne, Lakmal and Zweiri, Yahya},

journal={Remote Sensing},

volume={11},

number={20},

pages={2440},

year={2019},

publisher={Multidisciplinary Digital Publishing Institute}

}

Semantic Risk 3D Mapping

Exploration for Semantic 3D Mapping

Unmanned Aerial Vehicles (UAVs)

Research activities related to UAVs focus on:

- Fault tolerant UAV systems

- Aerial collaborative transportation

- Energy optimization during aerial missions

- Applications related to search and rescue, and construction site monitoring

Students

- Abdullah Mohiuddin

- Reem Ashour

- Randa Almadhoun

Relevant Publications

- Energy distribution in Dual-UAV collaborative transportation through load sharing

- A Survey of Single and Multi-UAV Aerial Manipulation

- State of the art in tilt-quadrotors, modelling, control and fault recovery

- UAV Payload Transportation via RTDP Based Optimized Velocity Profiles

- Fault Tolerance Control for Quad-Rotor UAV Using Gain-Scheduling in Matlab/Gazebo

- Site inspection drone: A solution for inspecting and regulating construction sites

- First-Principles Modeling of A Miniature Tilt-Rotor Convertiplane in Low-Speed Operation

- A framework of frequency-domain flight dynamics modeling for multi-rotor aerial vehicles

- Victim Localization in USAR Scenario Exploiting Multi-Layer Mapping Structure

- Exploration for Object Mapping Guided by Environmental Semantics using UAVs

Dual UAV collaborative transportation

Multi-UAV co-ordinated payload transport

UAV payload transportation via RTDP

Energy Estimation and distribution

Dual UAV payload transportation using RTDP

Site Inspection Drone

UAV wireless charging and tethering

Next Best View (NBV) with profiling

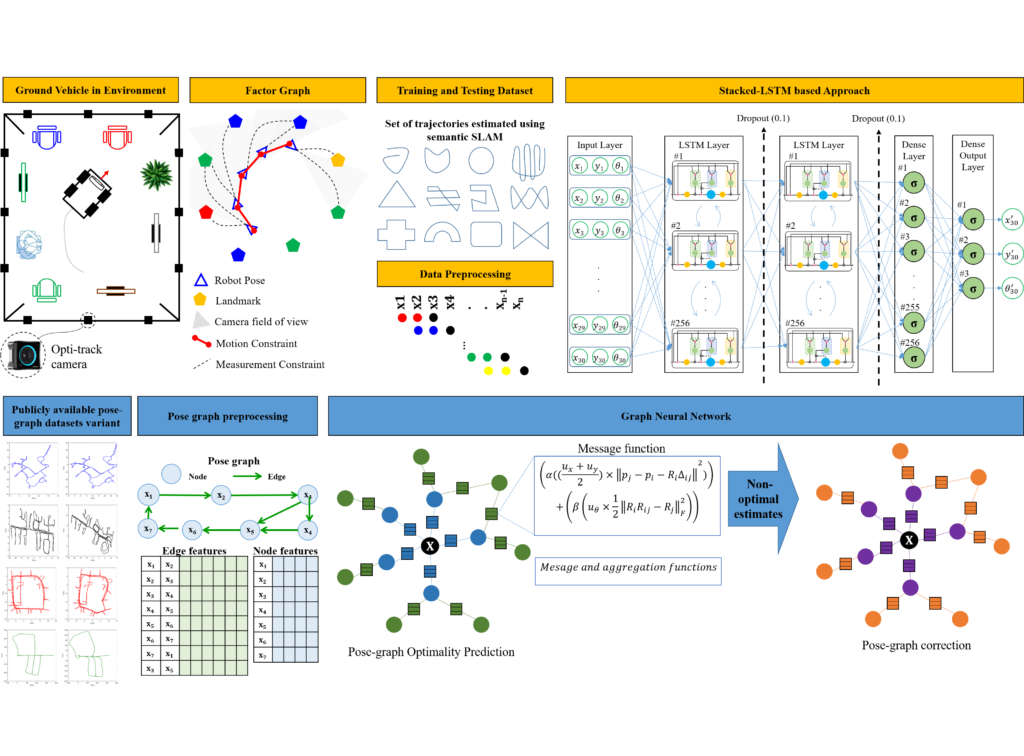

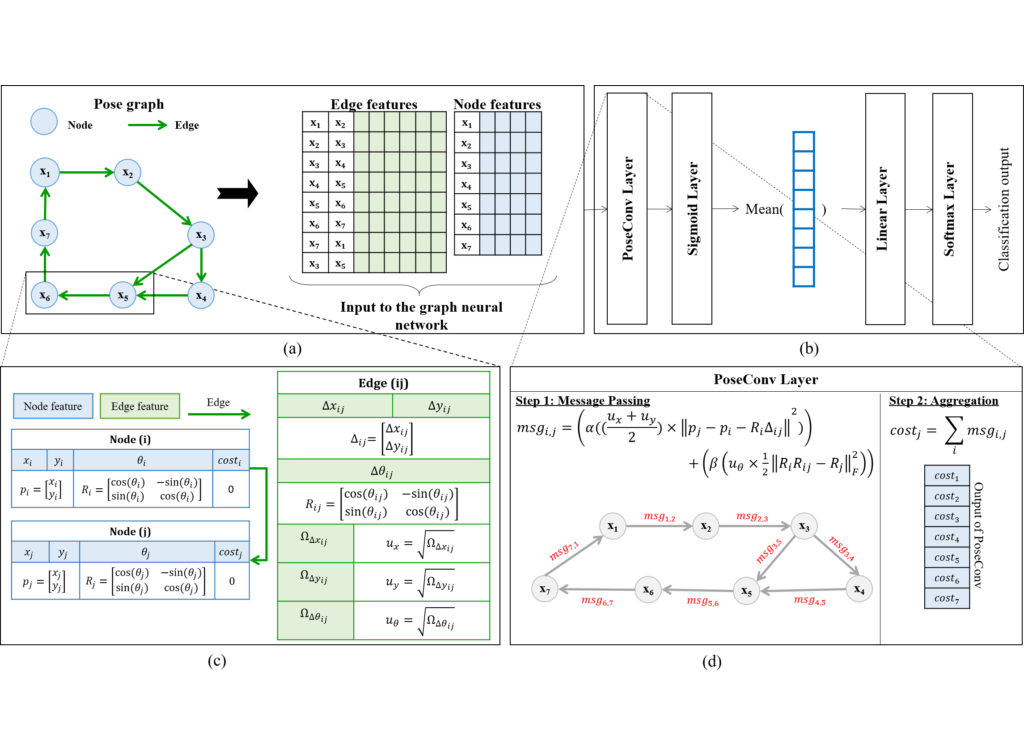

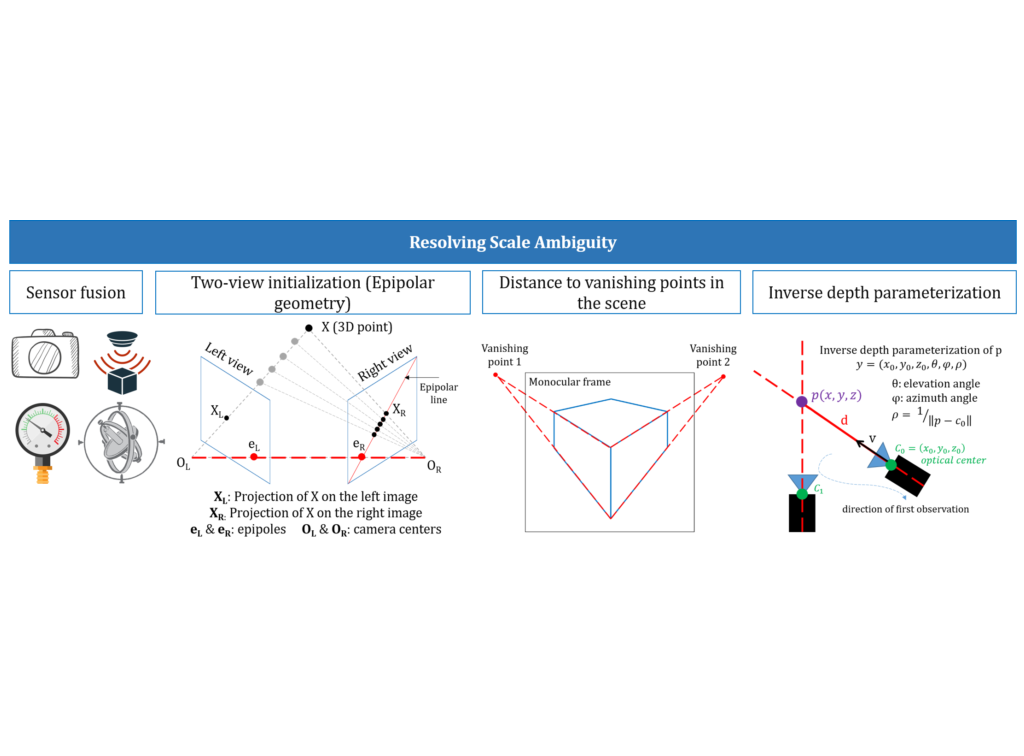

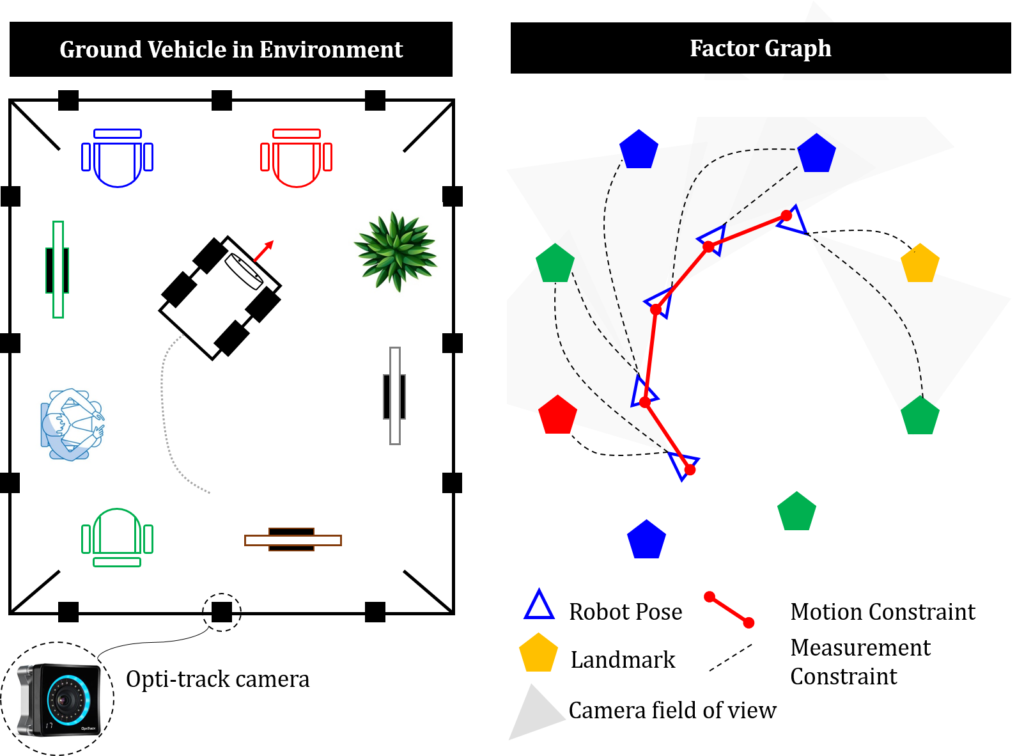

Simultaneous Localization and Mapping (SLAM)

This work focused on reducing SLAM position estimation error by utilizing modern deep learning techniques and semantic scene understanding for richer feature extraction.

Collaborators

- Prof. Shoudong Huang

- Prof. Yahya Zweiri

Students

- Rana Azzam

Relevant Publications

- A Stacked LSTM based Approach for Reducing Semantic Pose Estimation Error

- A Deep Learning Framework for Robust Semantic SLAM

- Feature-based visual simultaneous localization and mapping: a survey

Human Robot Interaction (HRI) in Assistive Robotics

The work focused on utilizing reinforcement learning to predict intentions during HRI for assistive robotics applications.
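As a rough illustration of the belief-tracking idea behind POMDP-based intention prediction, the sketch below maintains a probability distribution over possible destinations and updates it with Bayes' rule as joystick inputs arrive. It is a minimal sketch under stated assumptions, not the method from the publications listed below: the goal names, their bearings, the `joystick_likelihood` observation model and its `kappa` parameter are all hypothetical, the user's intention is assumed to stay fixed during the interaction, and no policy learning is shown.

```python
import math


def update_belief(belief, likelihoods):
    """One Bayes step: posterior is proportional to likelihood times prior."""
    unnormalized = {goal: belief[goal] * likelihoods[goal] for goal in belief}
    total = sum(unnormalized.values())
    if total == 0:
        # Observation ruled out every goal; fall back to the prior.
        return dict(belief)
    return {goal: p / total for goal, p in unnormalized.items()}


def joystick_likelihood(joystick_angle, goal_bearing, kappa=2.0):
    """Hypothetical observation model (assumption): the joystick direction
    is more likely to point roughly towards the intended goal."""
    return math.exp(kappa * math.cos(joystick_angle - goal_bearing))


# Hypothetical destinations and their bearings (radians) from the wheelchair.
goal_bearings = {"kitchen": 0.0, "bedroom": math.pi / 2, "door": math.pi}

# Start from a uniform belief over the user's intended destination.
belief = {goal: 1.0 / len(goal_bearings) for goal in goal_bearings}

# Fold in a short sequence of noisy joystick directions.
for angle in [0.1, -0.05, 0.2]:
    likelihoods = {
        goal: joystick_likelihood(angle, bearing)
        for goal, bearing in goal_bearings.items()
    }
    belief = update_belief(belief, likelihoods)

predicted = max(belief, key=belief.get)
print(predicted, belief)
```

In a full POMDP the belief update would also account for how the intention and the wheelchair state change over time, and an action policy would be computed over the belief.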

Collaborators

- Prof. Jaime Valls Miro

- Prof. Gamini Dissanayake

Relevant Publications

- POMDP-based long-term user intention prediction for wheelchair navigation

- Intention Driven Assistive Wheelchair Navigation

- Wheelchair Driver Assistance and Intention Prediction Using POMDPs

- A POMDP framework for modelling human interaction with assistive robots

Intention prediction - wheelchair navigation

Path Planning and Navigation

The work focused on developing efficient and effective path planning algorithms for navigating robots in cluttered environments with narrow passages.
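To make the narrow-passage problem concrete, the sketch below plans a path on a small occupancy grid where a wall separates start and goal except for a single-cell gap. It uses plain grid-based A* search, which is a stand-in chosen for a short deterministic example and not the sampling-based planners described in the publications below; the grid layout and the `astar` helper are hypothetical.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid; '#' cells are obstacles.
    Returns a list of (row, col) cells, or None if no path exists."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance is admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {start: None}
    cost = {start: 0}
    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if g > cost[current]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            if grid[nr][nc] == "#":
                continue
            new_cost = g + 1
            if new_cost < cost.get((nr, nc), float("inf")):
                cost[(nr, nc)] = new_cost
                came_from[(nr, nc)] = current
                heapq.heappush(
                    open_heap,
                    (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)),
                )
    return None


# Hypothetical map: a wall in column 4 with one free cell in row 2.
grid = [
    "....#....",
    "....#....",
    ".........",
    "....#....",
    "....#....",
]
path = astar(grid, (0, 0), (0, 8))
print(path)
```

Every feasible route here must pass through the gap at cell `(2, 4)`. For a large platform, the obstacles would typically be inflated by the robot's footprint first, which can close such gaps entirely and is one reason narrow passages are hard.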

Collaborators

- Prof. Jaime Valls Miro

- Prof. Gamini Dissanayake

Relevant Publications

- An Efficient Path Planner for Large Mobile Platforms in Cluttered Environments

- Sampling based time efficient path planning algorithm for mobile platforms

- An efficient strategy for robot navigation in cluttered environments in the presence of dynamic obstacles

- An adaptive manoeuvring strategy for mobile robots in cluttered dynamic environments